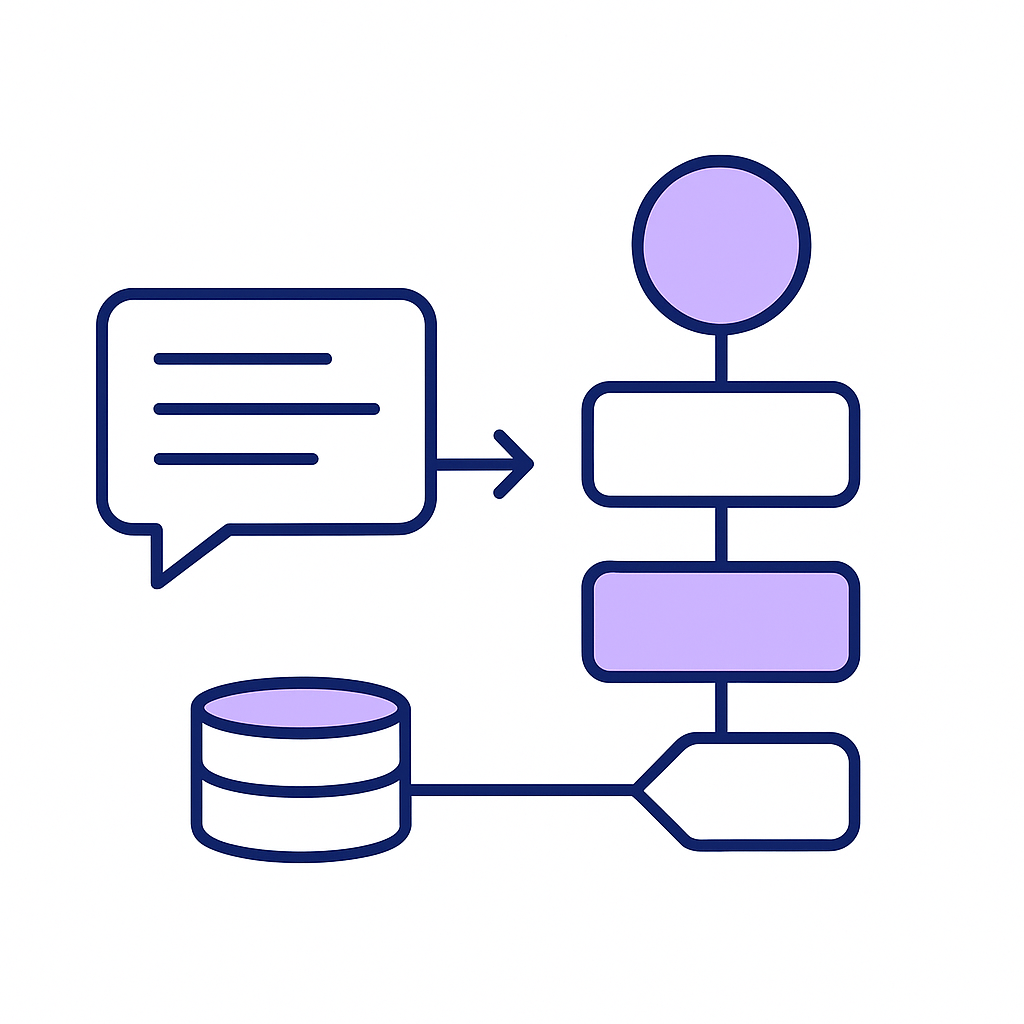

Translates natural language into workflows

The HiveProcessor is the brain of the system. It takes a HiveInput (your user's prompt plus optional team/user context), stores an initial "hive_request" record in the database, and then calls the AI model (via OpenAI_generate_response) to parse that prompt into a workflow made up of:

- A UUID workflow ID

- A set of discrete tasks, each assigned to a specific agent

- Explicit dependencies between tasks
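A minimal sketch of that workflow shape follows. It assumes the model replies with JSON containing a `tasks` array whose entries have `id`, `agent`, and optional `depends_on` fields. This is a hypothetical format, since the real OpenAI_generate_response output isn't shown here. The `Task`/`Workflow` classes and `parse_workflow` are illustrative names. The dependency check uses Python's standard `graphlib` module to reject unknown or cyclic dependencies before anything runs.

```python
import json
import uuid
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter


@dataclass
class Task:
    # Hypothetical task shape: one unit of work routed to one agent.
    id: str
    agent: str
    depends_on: list[str] = field(default_factory=list)


@dataclass
class Workflow:
    id: str
    tasks: list[Task]

    def execution_order(self) -> list[str]:
        """Return task IDs in an order that respects dependencies.

        Raises ValueError if a task depends on an unknown task or the graph has a cycle.
        """
        known = {t.id for t in self.tasks}
        graph = {}
        for t in self.tasks:
            missing = set(t.depends_on) - known
            if missing:
                raise ValueError(f"task {t.id} depends on unknown tasks: {sorted(missing)}")
            graph[t.id] = t.depends_on  # node -> its predecessors
        try:
            return list(TopologicalSorter(graph).static_order())
        except CycleError as exc:
            raise ValueError(f"dependency cycle: {exc.args[1]}") from exc


def parse_workflow(ai_response: str) -> Workflow:
    """Turn a (hypothetical) JSON reply from the model into a Workflow with a fresh UUID."""
    data = json.loads(ai_response)
    tasks = [Task(t["id"], t["agent"], t.get("depends_on", [])) for t in data["tasks"]]
    return Workflow(id=str(uuid.uuid4()), tasks=tasks)
```

For a reply in which a `write` task (BlogAgent) depends on a `fetch` task (URLAnalyzerAgent), `execution_order()` returns `['fetch', 'write']`.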

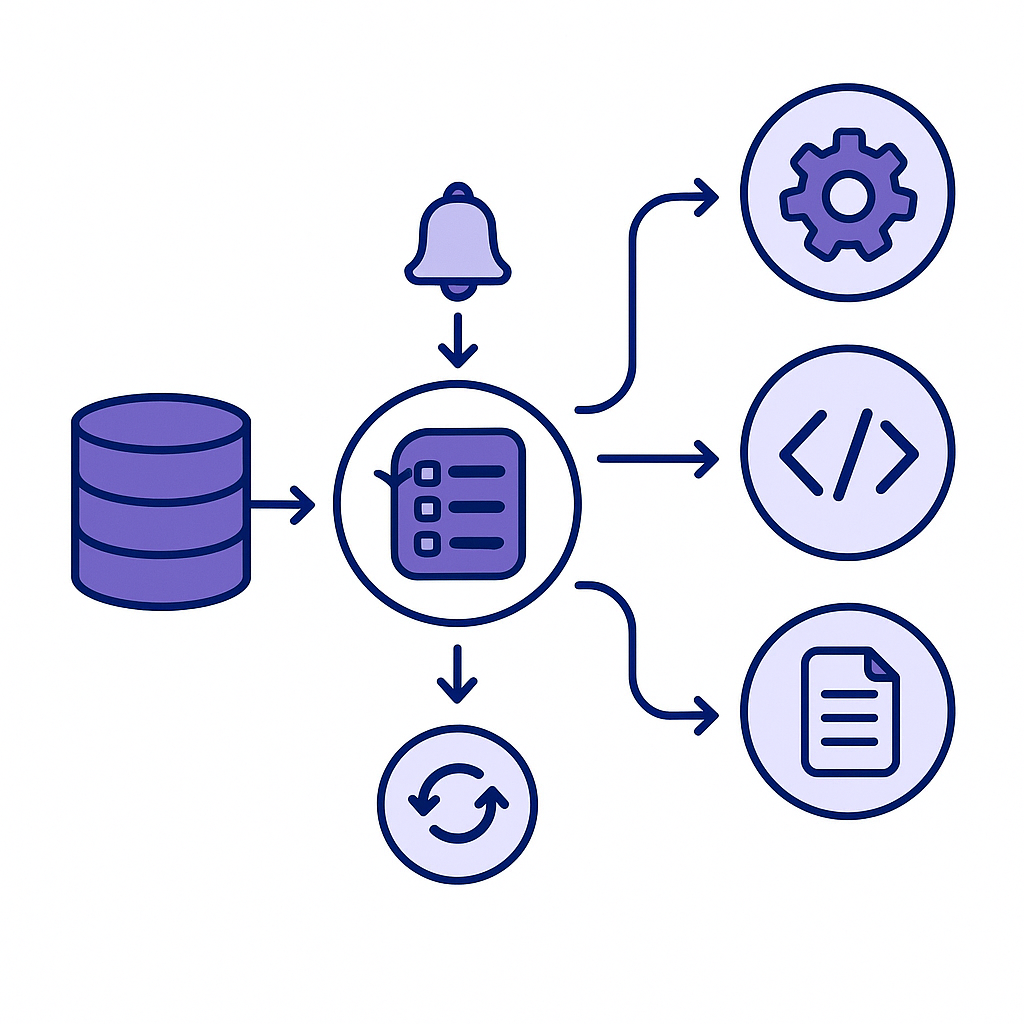

Orchestrates asynchronous task execution

The TaskManager (a thread-safe singleton) polls the database for new "pending" tasks and can optionally listen on PostgreSQL notification channels. It claims tasks whose dependencies are satisfied and hands them off, via the HiveProcessor and HiveCommunicator, to the appropriate agent implementation.
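The claiming logic can be sketched in memory. This assumes tasks are records with a `status` and a list of dependency IDs, which are hypothetical field names. A dict guarded by a lock stands in for the database, and the singleton uses double-checked locking in `__new__`. In a PostgreSQL-backed version the claim would more likely be a single transaction (for example `SELECT ... FOR UPDATE SKIP LOCKED`), with notifications only waking the polling loop early. That is an assumption, not a description of the actual TaskManager.

```python
import threading


class TaskManager:
    """Hypothetical in-memory stand-in for the TaskManager.

    The real component polls the database; here a dict of task records plays that role.
    """

    _instance = None
    _instance_lock = threading.Lock()

    def __new__(cls):
        # Double-checked locking: only the first caller creates the shared instance.
        if cls._instance is None:
            with cls._instance_lock:
                if cls._instance is None:
                    instance = super().__new__(cls)
                    instance._tasks = {}
                    instance._lock = threading.Lock()
                    cls._instance = instance
        return cls._instance

    def add_task(self, task_id, depends_on=()):
        with self._lock:
            self._tasks[task_id] = {"status": "pending", "depends_on": list(depends_on)}

    def claim_ready(self):
        """Atomically mark every pending task whose dependencies are all completed as running."""
        with self._lock:
            ready = [
                tid
                for tid, t in self._tasks.items()
                if t["status"] == "pending"
                and all(self._tasks.get(d, {}).get("status") == "completed" for d in t["depends_on"])
            ]
            for tid in ready:
                self._tasks[tid]["status"] = "running"
            return ready

    def complete(self, task_id):
        with self._lock:
            self._tasks[task_id]["status"] = "completed"
```

Because claiming and marking happen under one lock, two worker threads calling `claim_ready()` at the same time can never both receive the same task.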

Provides a unified agent framework

At the core is BaseAgent, an abstract class that loads configuration and OpenAI credentials, sets up logging, and opens a HiveDB connection. It defines standard methods for input validation, progress reporting, error handling, and saving results back to the database.
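Here is a rough outline of such a base class, using Python's `abc` module. The method names (`validate_input`, `report_progress`, `handle_error`, `save_result`, `execute`, `run`), the `InMemoryDB` stand-in for HiveDB, and the `EchoAgent` example are all hypothetical. OpenAI setup is left out so the sketch runs offline.

```python
import logging
from abc import ABC, abstractmethod
from typing import Any


class InMemoryDB:
    """Hypothetical stand-in for HiveDB: records results and errors in lists."""

    def __init__(self):
        self.results = []
        self.errors = []


class BaseAgent(ABC):
    # Subclasses list the input keys they require (hypothetical convention).
    required_keys: tuple = ()

    def __init__(self, db, config=None):
        self.db = db
        self.config = config or {}
        self.logger = logging.getLogger(type(self).__name__)

    def validate_input(self, payload: dict) -> None:
        missing = [k for k in self.required_keys if k not in payload]
        if missing:
            raise ValueError(f"missing input keys: {missing}")

    def report_progress(self, task_id: str, pct: int) -> None:
        self.logger.info("task %s: %d%%", task_id, pct)

    def handle_error(self, task_id: str, exc: Exception) -> None:
        self.logger.error("task %s failed: %s", task_id, exc)
        self.db.errors.append({"task_id": task_id, "error": str(exc)})

    def save_result(self, task_id: str, result: Any) -> None:
        self.db.results.append({"task_id": task_id, "result": result})

    def run(self, task_id: str, payload: dict) -> Any:
        """Template method: validate, execute, persist; failures are logged and stored."""
        try:
            self.validate_input(payload)
            self.report_progress(task_id, 0)
            result = self.execute(payload)
            self.save_result(task_id, result)
            self.report_progress(task_id, 100)
            return result
        except Exception as exc:
            self.handle_error(task_id, exc)
            return None

    @abstractmethod
    def execute(self, payload: dict) -> Any:
        """Agent-specific work, implemented by each concrete agent."""


class EchoAgent(BaseAgent):
    """Hypothetical example agent: upper-cases its input text."""

    required_keys = ("text",)

    def execute(self, payload: dict) -> Any:
        return payload["text"].upper()
```

Putting the shared steps in a concrete `run` method (the template-method pattern) means each agent only has to implement `execute`.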

Ships with a library of built-in agents

- URLAnalyzerAgent: fetches and parses web pages for structured insights

- BlogAgent: composes multi-section blog posts

- AnkhAgent: executes code snippets securely

- LinkedInMonitorAgent: tracks LinkedIn profiles and engagement
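To make "structured insights" concrete, here is a stdlib-only sketch of the parsing half of something like URLAnalyzerAgent. The extracted fields (title, h1 to h3 headings, link targets) and the names `PageSummaryParser`/`summarize_html` are assumptions, not the agent's actual output. Fetching is omitted so the sketch runs offline. In practice the HTML might come from `urllib.request.urlopen` or another HTTP client.

```python
from html.parser import HTMLParser


class PageSummaryParser(HTMLParser):
    """Collects the <title>, h1-h3 headings, and link targets from an HTML page."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings = []
        self.links = []
        self._current = None  # tag whose text is currently being captured
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" or tag in self.HEADINGS:
            self._current = tag
            self._buffer = []
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = "".join(self._buffer).strip()
            if tag == "title":
                self.title = text
            else:
                self.headings.append(text)
            self._current = None


def summarize_html(html: str) -> dict:
    """Return a small structured summary of one page (hypothetical output shape)."""
    parser = PageSummaryParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "headings": parser.headings, "links": parser.links}
```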

Manages agent metadata & access control

The HiveRegistry lazily loads all agent metadata from the database, enforces each agent's access scope (global, team, or user), and instantiates agent classes on demand. This lets you add or update agents without restarting the server.
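This sketch shows lazy loading plus scope checks. It assumes each metadata row has `name`, `scope`, `class_name`, and optionally `team_id`/`user_id`, which are hypothetical column names. `load_metadata` stands in for the database query, and `HiveRegistrySketch` is an illustrative name. `reload` shows one way new or updated agents could be picked up without a restart.

```python
import threading
from typing import Callable


class HiveRegistrySketch:
    """Hypothetical registry: lazy metadata load, scope checks, on-demand instantiation."""

    def __init__(self, load_metadata: Callable[[], list], classes: dict):
        self._load_metadata = load_metadata  # hypothetical: e.g. a query against an agents table
        self._classes = classes              # class_name -> Python class
        self._metadata = None                # nothing is loaded until the first lookup
        self._lock = threading.Lock()

    def _ensure_loaded(self) -> dict:
        with self._lock:
            if self._metadata is None:
                self._metadata = {m["name"]: m for m in self._load_metadata()}
            return self._metadata

    def reload(self) -> None:
        """Drop the cache so the next lookup re-reads metadata (no restart needed)."""
        with self._lock:
            self._metadata = None

    @staticmethod
    def _visible(meta: dict, team_id, user_id) -> bool:
        scope = meta["scope"]
        if scope == "global":
            return True
        if scope == "team":
            return team_id is not None and meta.get("team_id") == team_id
        if scope == "user":
            return user_id is not None and meta.get("user_id") == user_id
        return False  # unknown scopes are denied

    def get_agent(self, name: str, team_id=None, user_id=None, **kwargs):
        metadata = self._ensure_loaded()
        meta = metadata.get(name)
        if meta is None or not self._visible(meta, team_id, user_id):
            raise PermissionError(f"agent {name!r} is not available in this scope")
        # Instantiate only now, when the agent is actually requested.
        return self._classes[meta["class_name"]](**kwargs)
```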

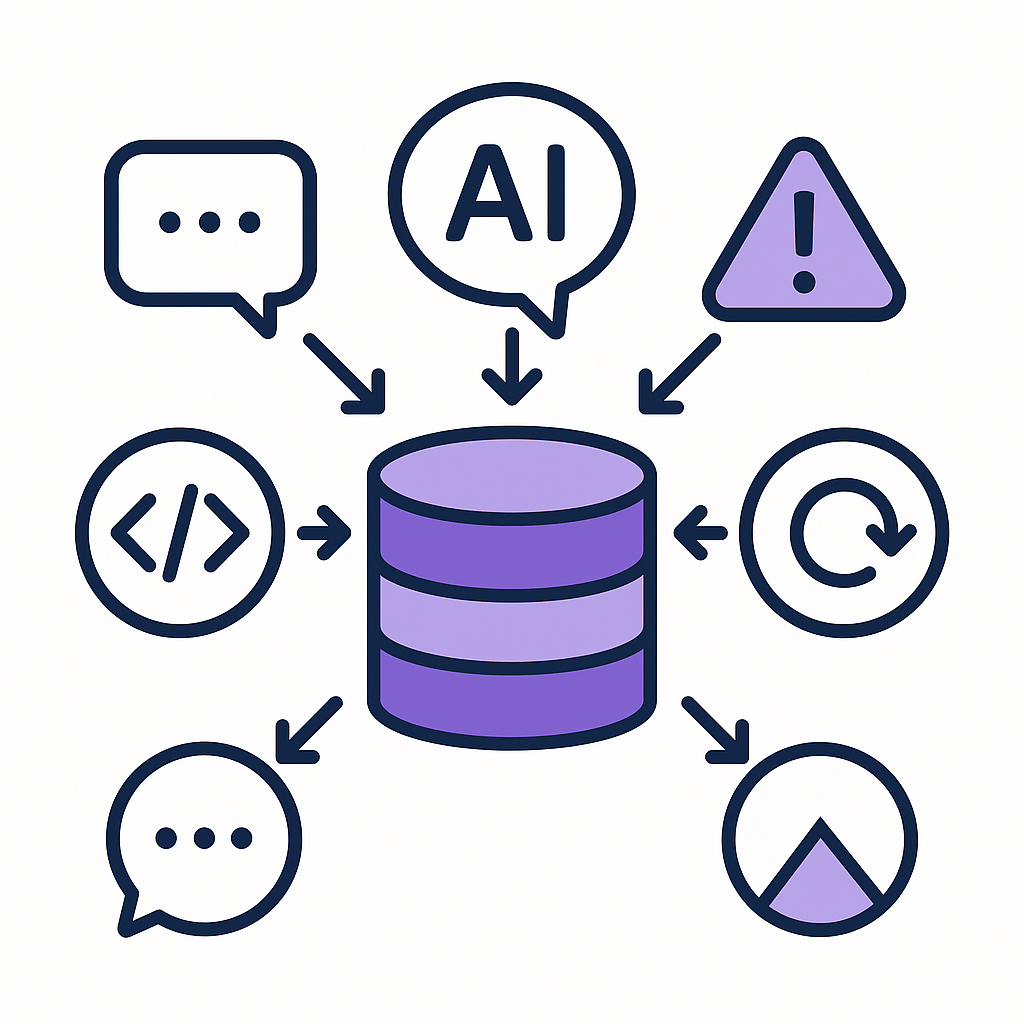

Persists everything in a central database

All requests, AI responses, agent requests and results, error logs, and status updates are stored in a Supabase/PostgreSQL backend via HiveDB. This ensures durability and lets you query or replay workflows later.
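A minimal append-only event log illustrates the "query or replay" idea. It uses the standard `sqlite3` module as a stand-in for Supabase/PostgreSQL. The `EventStore` class, the `hive_events` table, and its columns are hypothetical, because the real HiveDB schema isn't described here.

```python
import json
import sqlite3


class EventStore:
    """Append-only event log; sqlite3 stands in for the Supabase/PostgreSQL backend."""

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS hive_events (
                   seq INTEGER PRIMARY KEY AUTOINCREMENT,
                   workflow_id TEXT NOT NULL,
                   kind TEXT NOT NULL,      -- e.g. request, ai_response, result, error
                   payload TEXT NOT NULL    -- JSON-encoded body
               )"""
        )

    def record(self, workflow_id: str, kind: str, payload: dict) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO hive_events (workflow_id, kind, payload) VALUES (?, ?, ?)",
                (workflow_id, kind, json.dumps(payload)),
            )

    def replay(self, workflow_id: str):
        """Yield (kind, payload) for one workflow in the order it was recorded."""
        rows = self.conn.execute(
            "SELECT kind, payload FROM hive_events WHERE workflow_id = ? ORDER BY seq",
            (workflow_id,),
        )
        for kind, payload in rows:
            yield kind, json.loads(payload)
```

Ordering by an auto-incrementing sequence number, rather than by timestamp, keeps the replay order exact even when several events land in the same instant.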